ABSTRACT

The rise of machine learning has opened a transformative era in artificial intelligence, bringing unparalleled capabilities together with ethical dilemmas that defy traditional moral paradigms. The intricate nature of machine intelligence calls for a nuanced ethical framework, and this paper explores one through the lens of William Leonard Rowe’s Three Logical Problems of Evil, a framework drawn from the philosophy of religion and its debates over the problem of evil. Rowe’s three problems (moral evil, natural evil, and gratuitous evil) were traditionally applied to theological questions, yet they find intriguing parallels in machine learning. The study takes a multidisciplinary approach, merging insights from computer science, ethics, and philosophy. A literature review covering algorithmic bias, opaque decision-making, and societal impact establishes the groundwork for current debates, and applying Rowe’s problems to this landscape identifies three analogues: biased decision-making, unintended consequences, and societal impacts.

The ethical ambiguity of machine learning arises from the autonomy and non-anthropocentric nature of algorithms, which challenge conventional moral distinctions. Algorithmic biases rooted in historical data raise the question of whether their outcomes are morally reprehensible or merely unintended consequences. Opaque decision-making processes challenge accountability, and the autonomy of machine learning systems complicates traditional frameworks that rely on human intentionality. Rowe’s Three Logical Problems provide a conceptual scaffold for evaluating these challenges. The problem of moral evil resonates in biased algorithmic behavior and prompts reflection on the absence of intent in algorithms. The problem of natural evil aligns with unintended consequences, questioning the morality of outcomes that arise from inherent unpredictability. The problem of gratuitous evil mirrors debates on societal impact, demanding an understanding of societal context and of the trade-offs involved in deploying machine learning solutions.

Because machines operate within a binary realm and lack moral consciousness, the biases encoded in their algorithms raise ethical concerns. This paper therefore advocates a paradigm shift that redefines morality for machines. Context-specific ethical evaluations, which take account of cultural norms and legal frameworks, guide decisions in particular settings. A machine-centric morality acknowledges the absence of human-like consciousness and evaluates machine behavior by criteria such as impact, adherence to ethical guidelines, and alignment with societal values.

In conclusion, this research contributes to the discourse on the ethical implications of machine learning by offering an understanding that goes beyond traditional moral frameworks. Extending Rowe’s logical problems to machine learning helps navigate its ethical complexities and calls for ongoing reflection on, and adaptation of, ethical frameworks for responsible development in the age of artificial intelligence. The philosophical foundation provided by Rowe’s logical problems serves as a guide, compelling a comprehensive reevaluation of morality in the context of evolving technologies.

KEYWORDS: machine learning, moral, values

1. INTRODUCTION

The advent of machine learning has ushered in a transformative era in artificial intelligence, presenting society with unprecedented capabilities and challenges. As we grapple with the ethical implications of this burgeoning field, the conventional moral dichotomy of “good” and “bad” appears increasingly inadequate to capture the intricacies of machine intelligence. This paper contends that machine learning, as a unique form of intelligence, operates within a distinctive ethical framework that demands nuanced evaluation. Traditional ethical discussions centered on human actions and intentions often struggle to encompass the complexities of algorithmic decision-making and the autonomy exhibited by machine learning systems. The gap between our existing moral vocabulary and the novel challenges posed by artificial intelligence becomes particularly pronounced when considering issues such as algorithmic biases, opaque decision-making processes, and the potential societal impacts of machine learning applications.

In approaching the ethical landscape of machine learning, we draw inspiration from William Leonard Rowe’s Three Logical Problems of Evil, a philosophical framework traditionally applied to discussions of theodicy and the nature of good and evil. While Rowe’s work primarily addresses questions related to the existence of God and the problem of evil in the world, we find his logical problems to be a thought-provoking lens through which to scrutinize the actions and consequences of machine learning.

The central premise of this exploration is that the ethical challenges posed by machine learning necessitate a departure from anthropocentric moral evaluations. Machine learning algorithms lack human intent or consciousness, raising questions about the applicability of traditional moral judgments. Rowe’s logical problems, designed to navigate the complexities of moral philosophy, provide a conceptual scaffold for understanding the nuances of machine learning ethics.

This paper adopts a multidisciplinary approach, amalgamating insights from computer science, ethics, and philosophy to explore the intersections of machine learning and moral reasoning. Through a critical review of existing literature and the subsequent application of Rowe’s logic to machine learning, we aim to expose the inherent ethical ambiguity of the field and to argue for a reevaluation of our conventional ethical paradigms. As we embark on this philosophical journey, our objective is not only to question the ethical implications of machine learning but also to propose a reconceptualization of morality that accommodates the unique attributes of this artificial intelligence paradigm.

2. BACKGROUND ON WILLIAM LEONARD ROWE

William Leonard Rowe, a distinguished philosopher of religion, is renowned for his work on the problem of evil. Born in [birthdate], Rowe explored the intersections of philosophy, theology, and ethics. His seminal article, “The Problem of Evil and Some Varieties of Atheism” (1979), argued that instances of intense suffering which an all-powerful, all-knowing being could have prevented without thereby losing some greater good give rational grounds for believing that no all-powerful, all-knowing, and wholly good deity exists. That argument, and the debate it provoked over whether evil can be reconciled with such a deity, supplies the framework this paper draws on.

The three problems considered here are moral evil, natural evil, and gratuitous evil. The problem of moral evil asks how suffering caused by the free choices of moral agents can be reconciled with the notion of an omnibenevolent deity. The problem of natural evil concerns suffering caused by natural disasters and other processes that involve no moral agent, while the problem of gratuitous evil asks whether certain instances of suffering serve any greater purpose at all; it is the apparent existence of such pointless suffering that drives Rowe’s argument.

Although Rowe’s work focuses on the problem of evil and the challenges it poses to traditional theological beliefs, his logical problems resonate when applied to the ethical complexities of machine learning. Rowe’s framework provides an intellectual foundation for scrutinizing moral ambiguity and for evaluating the consequences of actions performed without human intent or consciousness, conditions that characterize machine learning algorithms.

By incorporating Rowe’s insights into our examination of machine learning, we seek to extend the application of his logical problems beyond the traditional realm of theology. This extension allows us to frame the ethical challenges of machine learning within a philosophical context that grapples with the nature of good and evil in the absence of intentional agency. As we delve into the subsequent sections of this paper, Rowe’s logical problems will serve as a guiding framework to explore how machine learning introduces a distinctive ethical landscape that challenges conventional moral judgments.

3. METHODOLOGY

The methodology involves a systematic exploration of the ethical challenges posed by machine learning through the lens of William Leonard Rowe’s Three Logical Problems of Evil. The research process comprises two primary phases: a comprehensive review of existing literature on machine learning ethics and a critical application of Rowe’s logical problems to the unique characteristics of machine intelligence.

The literature review seeks to synthesize insights from diverse disciplines, including computer science, ethics, and philosophy, to establish a foundation for understanding current debates and perspectives on machine learning ethics. This phase critically examines seminal works, empirical studies, and theoretical frameworks related to algorithmic biases, transparency, accountability, and the societal impact of machine learning applications.

Following the literature review, we apply Rowe’s logical problems to the ethical landscape of machine learning. This involves identifying analogous concepts in the machine learning context for each of Rowe’s problems—moral evil, natural evil, and gratuitous evil. We scrutinize instances of biased decision-making, unintended consequences, and the potential harm caused by machine learning algorithms, seeking to understand how these align with or diverge from Rowe’s original theological framework.

Our methodology aims to bridge the philosophical and technical dimensions of machine learning ethics, fostering a comprehensive understanding of the ethical challenges unique to this emerging field. Through this dual approach, we endeavor to contribute to a nuanced discussion on the ethical implications of machine learning, recognizing its distinctive nature in comparison to traditional human-centered moral evaluations.

3a. The Ethical Ambiguity of Machine Learning

Machine learning introduces an ethical landscape marked by unprecedented complexities and nuances, challenging conventional moral distinctions. At the core of this ambiguity lies the autonomy and non-anthropocentric nature of machine learning algorithms. Unlike human agents who possess intent and consciousness, algorithms operate without inherent moral agency, prompting a reconsideration of the applicability of traditional moral categories.

Algorithmic Biases:

One significant facet of the ethical landscape is the prevalence of algorithmic biases. Machine learning models trained on historical data may inherit and perpetuate societal biases, leading to unjust outcomes and reinforcing existing inequalities. The challenge here lies in discerning whether these biases are morally reprehensible actions on the part of the algorithm or unintended consequences of flawed training data.
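The inheritance of bias from historical labels can be illustrated with a deliberately small example. The Python sketch below is a hypothetical toy, not a method from the literature reviewed here: two groups, “A” and “B”, have identical qualification scores, but the historical hiring labels applied a stricter cutoff to group B. The cutoffs, the names HISTORICAL_CUTOFF, train, and selection_rate, and the bucketing scheme are all illustrative assumptions. A simple frequency-based classifier trained on those labels reproduces the disparity without any intent.

```python
from collections import Counter, defaultdict

# Hypothetical toy setup: two groups with identical qualification scores (0-99).
# The historical labels encode a biased rule: group "A" was hired at score >= 50,
# group "B" only at score >= 70. These numbers are illustrative assumptions.
HISTORICAL_CUTOFF = {"A": 50, "B": 70}


def historical_label(group: str, score: int) -> int:
    """Biased past decision: 1 = hired, 0 = rejected."""
    return int(score >= HISTORICAL_CUTOFF[group])


def train(records):
    """Learn the majority historical label for each (group, score bucket).

    A deliberately simple frequency-based classifier: it has no intent,
    it just reproduces whatever regularities the training labels contain.
    """
    votes = defaultdict(Counter)
    for group, score, label in records:
        votes[(group, score // 10)][label] += 1
    return {key: counter.most_common(1)[0][0] for key, counter in votes.items()}


def predict(model, group: str, score: int) -> int:
    return model.get((group, score // 10), 0)


def selection_rate(model, group: str, scores) -> float:
    decisions = [predict(model, group, s) for s in scores]
    return sum(decisions) / len(decisions)


scores = list(range(100))
training_data = [(g, s, historical_label(g, s)) for g in ("A", "B") for s in scores]
model = train(training_data)

# Evaluate on a fresh pool in which both groups are equally qualified.
rate_a = selection_rate(model, "A", scores)
rate_b = selection_rate(model, "B", scores)
impact_ratio = rate_b / rate_a

print(f"selection rate A={rate_a:.2f}, B={rate_b:.2f}, ratio={impact_ratio:.2f}")
# A ratio below 0.8 is commonly flagged under the "four-fifths" rule of thumb.
print("flagged:", impact_ratio < 0.8)
```

On this toy data the model selects half of group A but only 30 percent of group B, a selection-rate ratio of 0.6, even though both groups have exactly the same scores. Nothing in the code instructs the model to discriminate; the disparity enters entirely through the training labels, which is why it is hard to say whether such an outcome is a reprehensible act of the algorithm or a consequence of flawed data. The 0.8 cutoff reflects the “four-fifths” rule of thumb from US employment-discrimination guidance and is used here only as a familiar screening heuristic.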

Opaque Decision-Making Processes:

Another dimension of ethical ambiguity stems from the opacity of decision-making in complex machine learning models. As these models grow in size and complexity, their inner workings become increasingly inscrutable, posing challenges to accountability and interpretability. Judging the morality of an action becomes difficult when the rationale behind a decision remains hidden within layers of learned parameters.

Autonomous Decision-Making:

Machine learning systems often operate autonomously, making decisions without direct human intervention. This autonomy raises questions about responsibility and culpability when undesirable outcomes occur. Traditional ethical frameworks, which rely on human intentionality, struggle to encompass the unintentional consequences emerging from autonomous machine decisions.

Societal Impact:

The societal impact of machine learning applications adds another layer to the ethical discourse. From healthcare to criminal justice, machine learning algorithms influence critical decisions that can profoundly affect individuals and communities. Assessing the morality of these impacts requires a broader understanding of the societal context and an acknowledgment of the intricate interplay between technology and human systems.

In navigating this ethical terrain, we turn to William Leonard Rowe’s Three Logical Problems of Evil, traditionally applied to theodicy, to provide a conceptual framework for evaluating the ethical implications of machine learning.

3b. Rowe’s Three Logical Problems

Problem of Moral Evil:

In the context of machine learning, the problem of moral evil finds resonance in instances where algorithms exhibit biased behavior, leading to discriminatory outcomes. While traditional moral judgments often attribute evil to intentional actions, the absence of intent in algorithms complicates this assessment. Rowe’s logical problem prompts us to consider whether the unintentional perpetuation of biases by algorithms constitutes a form of moral evil, or if alternative moral frameworks are needed.

Problem of Natural Evil:

The problem of natural evil, which addresses suffering caused by non-moral factors, aligns with the challenges posed by unintended consequences in machine learning. As algorithms operate in complex and dynamic environments, unanticipated outcomes may result, causing harm without malintent. This raises questions about the morality of actions that stem from the inherent unpredictability of machine learning systems.

Problem of Gratuitous Evil:

The problem of gratuitous evil, exploring the necessity of certain instances of suffering, is mirrored in the debate surrounding the societal impact of machine learning. Assessing whether the harms caused by algorithms are gratuitous or serve a greater purpose necessitates a nuanced understanding of the societal context and the trade-offs involved in deploying machine learning solutions.
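The question of whether a harm is gratuitous can be given a rough operational form. By loose analogy with Rowe’s criterion, on which suffering is gratuitous if it could have been prevented without losing some greater good, one might call a deployment choice gratuitously harmful when an available alternative achieves at least the same benefit with strictly less harm. The Python sketch below applies that test to three hypothetical operating points of a single model; the thresholds, the benefit and harm counts, and the function name dominating_alternatives are invented for illustration and do not come from any study.

```python
# Hypothetical operating points for one model, all measured on the same
# evaluation set. "benefit" = true cases correctly flagged; "harm" = people
# wrongly flagged (false positives). The numbers are illustrative only.
OPERATING_POINTS = {
    "threshold 0.3": {"benefit": 90, "harm": 40},
    "threshold 0.5": {"benefit": 90, "harm": 25},
    "threshold 0.7": {"benefit": 70, "harm": 10},
}


def dominating_alternatives(name: str, points: dict) -> list:
    """List the alternatives that make the harm at `name` avoidable.

    An alternative qualifies if it delivers at least the same benefit with
    strictly less harm, a loose analogue of Rowe's notion of gratuitous evil.
    """
    current = points[name]
    return [
        other
        for other, p in points.items()
        if other != name
        and p["benefit"] >= current["benefit"]
        and p["harm"] < current["harm"]
    ]


for name in OPERATING_POINTS:
    better = dominating_alternatives(name, OPERATING_POINTS)
    verdict = f"avoidable harm (see {better})" if better else "no dominating alternative"
    print(f"{name}: {verdict}")
```

Only the 0.3 threshold fails the test: the 0.5 threshold catches the same 90 cases while wrongly flagging 15 fewer people. Between 0.5 and 0.7 there is a genuine trade-off (20 fewer cases caught against 15 fewer false flags) that the numbers alone cannot settle, which is where the societal context discussed above becomes decisive.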

In exploring the ethical dimensions of intelligent machines, it is essential to draw parallels with William Leonard Rowe’s philosophical inquiries, particularly his examination of the problem of evil. Rowe, known for his contributions to the philosophy of religion, grappled with the challenge of reconciling the existence of evil in the world with the notion of an omnipotent and benevolent deity. Although Rowe’s concerns centered on theological questions, the intersection of his ideas with the ethical challenges posed by intelligent machines reveals intriguing parallels. For a machine there is no good and bad in the moral sense; the only “good” and “bad” it knows are 1s and 0s. Our job is to teach machines, and to create for them, all the grey values between 0s and 1s.

Rowe’s exploration of the problem of evil emphasizes the existence of gratuitous suffering, instances where seemingly unnecessary pain and harm occur. In the context of intelligent machines, the ethical dilemmas arise from the potential for biased decision-making and unintended consequences. Much like Rowe questioned the compatibility of an all-powerful and benevolent deity with the presence of evil, we must question the ethical framework guiding machines that, despite their computational power, lack an intrinsic understanding of good and bad.

Machines operate within a binary realm, devoid of moral consciousness. Rowe’s exploration of evil becomes relevant as we confront the unintended consequences of machine actions, which are driven solely by algorithms and data inputs. Ethical concerns stem from the potential for biases encoded in algorithms to produce ethically questionable decisions, such as perpetuating societal inequalities or reinforcing stereotypes. Rowe’s philosophy challenges us to consider the implications of these machine-driven decisions for broader societal well-being. The call to teach machines the grey values between 0s and 1s echoes Rowe’s scrutiny of whether particular evils can be justified: both seek to bring nuance and ethical consideration to judgments that would otherwise be all-or-nothing, here the inherently binary nature of machine decision-making.
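In engineering terms, the grey values already exist inside many models: a probabilistic classifier produces a score between 0 and 1, and the binary verdict appears only when a threshold is applied. The Python sketch below contrasts a single cutoff with a policy that keeps a grey zone and defers uncertain cases to human review. It is an illustration under stated assumptions rather than a method from Rowe or from the sources cited here; the function names binary_decision and graded_decision and the 0.2 and 0.8 thresholds are hypothetical.

```python
# Hypothetical thresholds: scores at or above APPROVE are accepted, scores
# below REJECT are declined, and the band in between is deferred to a person.
APPROVE = 0.8
REJECT = 0.2


def binary_decision(score: float) -> str:
    """Collapse a model score into one of two outcomes (the '0s and 1s' view)."""
    return "approve" if score >= 0.5 else "reject"


def graded_decision(score: float) -> str:
    """Keep a grey zone: defer uncertain cases instead of forcing a verdict."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    if score >= APPROVE:
        return "approve"
    if score < REJECT:
        return "reject"
    return "refer to human review"


for s in (0.05, 0.45, 0.55, 0.95):
    print(f"{s:.2f}: binary={binary_decision(s)}, graded={graded_decision(s)}")
```

Scores of 0.45 and 0.55 receive opposite verdicts under the single cutoff even though they differ only slightly, whereas the graded policy refers both to a person. Where the thresholds sit is itself a value judgment and should depend on context, for instance on the cost of an erroneous decision in healthcare compared with criminal justice.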

To address the ethical challenges, we must recognize that, unlike Rowe’s emphasis on human moral agency, machines lack an innate sense of morality. Human-defined parameters become crucial in guiding machine behavior and mitigating the potential for harm. The opaque nature of machine operations, reminiscent of Rowe’s acknowledgment of the mystery surrounding theodicy, adds complexity to our understanding of the ethical implications.

3c. Beyond Good and Evil: Redefining Morality for Machines

In confronting the ethical ambiguity of machine learning, the question arises: How can we redefine morality to accommodate the distinctive attributes of artificial intelligence? Traditional moral frameworks, rooted in human intent and consciousness, may prove inadequate in addressing the non-anthropocentric nature of machine intelligence. A paradigm shift is required to reconceptualize ethics for machines.

Context-Specific Ethical Evaluations:

The redefinition of machine morality involves recognizing that ethical evaluations should be context-specific, considering the unique attributes and limitations of machine learning algorithms. Context-specific ethical evaluations involve assessing the moral implications of actions within a particular setting or scenario. These evaluations consider the unique factors influencing ethical decisions, such as cultural norms, legal frameworks, and the specific consequences of an action. Examining the context helps determine the appropriateness of ethical principles, weighing conflicting values or duties. For instance, in healthcare, decisions about resource allocation during a pandemic require considerations of fairness, utility, and individual rights. Context-specific ethical evaluations guide individuals and organizations in navigating complex situations, ensuring that ethical choices align with the nuanced demands of their environment while upholding fundamental moral principles.

Machine-Centric Morality:

Machine-centric morality signifies a paradigm shift in ethical considerations, one that recognizes the distinctive attributes of artificial intelligence. Unlike traditional moral frameworks rooted in human intent and consciousness, it acknowledges that machines lack these qualities. Its crux lies in establishing criteria for evaluating the moral implications of algorithms based on their impact, their adherence to predefined ethical guidelines, and their alignment with societal values. Developing such a morality requires a departure from anthropocentric perspectives and an understanding that machines operate within a binary realm, devoid of inherent moral consciousness. The criteria for evaluating machine behavior must also reflect the context-specific nature of machine actions, allowing them to adapt to diverse scenarios. Machine-centric morality thus offers a practical, forward-looking framework to guide the ethical development and deployment of artificial intelligence. As machines become integrated into more aspects of society, this perspective encourages an understanding of ethics tailored to the intricacies of machine intelligence, fostering responsible and thoughtful advancement in the field.

4. CONCLUSION

In conclusion, the rapid evolution of machine learning has ushered in a transformative era in artificial intelligence, accompanied by profound ethical inquiries that challenge traditional moral frameworks. This research paper embarked on an exploration of the intricate ethical landscape of machine learning, guided by the philosophical insights of William Leonard Rowe, particularly his Three Logical Problems of Evil. The central premise of our investigation posits that the conventional dichotomy of “good” and “bad” is insufficient for comprehending this emergent form of intelligence.

Drawing inspiration from Rowe’s philosophical lens, we scrutinized the ethical challenges intrinsic to machine learning, including algorithmic biases, decision-making opacity, and the autonomous nature of machine learning systems. We applied Rowe’s Three Logical Problems of Evil to illuminate the limitations of relying on human moral constructs to evaluate the actions and outcomes of machine learning algorithms.

Our examination of the ethical ambiguity of machine learning revealed unprecedented complexities and nuances, challenging conventional moral distinctions. Machine learning operates within a distinctive ethical framework that demands nuanced evaluation, necessitating a departure from anthropocentric moral evaluations. The autonomy and non-anthropocentric nature of machine learning algorithms raise questions about the applicability of traditional moral categories, prompting a reevaluation of our conventional ethical paradigms.

By extending Rowe’s logical problems to the realm of machine learning, we provided a conceptual scaffold for understanding the nuances of machine learning ethics. The problems of moral evil, natural evil, and gratuitous evil found resonance in instances of biased decision-making, unintended consequences, and societal impacts caused by machine learning algorithms.

In response to the ethical challenges posed by machine learning, we proposed a paradigm shift in ethical considerations, advocating a redefinition of morality that acknowledges and accommodates the distinct nature of machine intelligence. Recognizing the absence of human-like consciousness and intent in machines, we emphasized the importance of context-specific ethical evaluations and the development of a machine-centric morality.

This research contributes to the ongoing discourse surrounding the ethical implications of machine learning, offering a multidisciplinary approach that bridges the philosophical and technical dimensions of the field. The philosophical foundation provided by Rowe’s logical problems serves as a guide to navigate the ethical complexities of intelligent machines, encouraging a nuanced understanding that goes beyond traditional moral frameworks. As society continues to grapple with the integration of machine learning into various domains, this research encourages ongoing reflection and adaptation of ethical frameworks to ensure the responsible and thoughtful development of this evolving technology.

5. REFERENCES

-Rowe, William L. Can God Be Free? Oxford: Clarendon Press, 2004.

-Rowe, William L. “The Problem of Evil and Some Varieties of Atheism.” American Philosophical Quarterly 16, no. 4 (1979): 335–41. http://www.jstor.org/stable/20009775.

-Rowe, William L. Philosophy of Religion: An Introduction. 1st ed. Encino, CA: Dickenson Publishing Company, 1978.

-Rowe, William L. “The Empirical Argument from Evil.” In Rationality, Religious Belief, and Moral Commitment, edited by Audi and Wainwright, 227–47. 1986.

-Rowe, William L. “Evil and Theodicy.” Philosophical Topics 16 (1988): 119–32.

-Rowe, William L. “Ruminations about Evil.” Philosophical Perspectives 5 (1991): 69–88.

-Rowe, William L. “William Alston on the Problem of Evil.” In The Rationality of Belief and the Plurality of Faith: Essays in Honor of William P. Alston, edited by Thomas D. Senor, 71–93. Ithaca, NY: Cornell University Press, 1995.

-Adams, Robert Merrihew, and William L. Rowe. “The Cosmological Argument.” Philosophical Review 87, no. 3 (1978): 445.

-Rowe, William L. “The Metaphysics of Free Will.” Religious Studies 32, no. 1 (1996): 129–31.

-Pellegrino, Massimo, and Richard Kelly. “Intelligent Machines and the Growing Importance of Ethics.” In The Brain and the Processor: Unpacking the Challenges of Human-Machine Interaction, edited by Andrea Gilli. NATO Defense College, 2019. http://www.jstor.org/stable/resrep19966.11.

-European Commission. “Building Trust in Human-Centric Artificial Intelligence.” Communication from the Commission to the European Parliament, the Council, the European Economic and Social Committee and the Committee of the Regions. Brussels, 2019, p. 8.

-Etzioni, A., and O. Etzioni. “Incorporating Ethics into Artificial Intelligence.” Journal of Ethics 21 (2017): 413.

-Daly, Angela, S. Kate Devitt, and Monique Mann. “AI Ethics Needs Good Data.” In AI for Everyone? Critical Perspectives, edited by Pieter Verdegem, 103–22. University of Westminster Press, 2021. http://www.jstor.org/stable/j.ctv26qjjhj.9.

-Hoffmann, Anna Lauren. “Where Fairness Fails: Data, Algorithms, and the Limits of Anti-Discrimination Discourse.” Information, Communication & Society 22, no. 7 (2019): 900–915.

-Heyd, D. Supererogation: Its Status in Ethical Theory. Cambridge: Cambridge University Press, 1982.

-Kaplan, Andreas. “Artificial Intelligence (AI): When Humans and Machines Might Have to Coexist.” In AI for Everyone? Critical Perspectives, edited by Pieter Verdegem, 21–32. University of Westminster Press, 2021. http://www.jstor.org/stable/j.ctv26qjjhj.4.

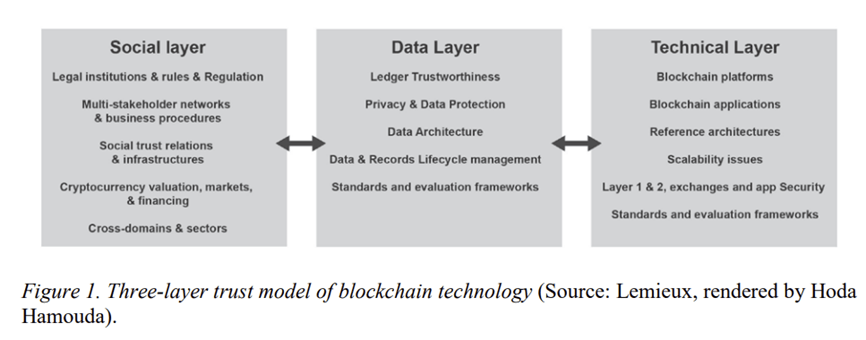

-Lemieux, V. L., and C. Feng. “Conclusion: Theorizing from Multidisciplinary Perspectives on the Design of Blockchain and Distributed Ledger Systems (Part 2).” In Building Decentralized Trust, edited by V. L. Lemieux and C. Feng. Cham: Springer, 2021. https://doi.org/10.1007/978-3-030-54414-0_7.

-Salah, Alkim Almila Akdag. “AI Bugs and Failures: How and Why to Render AI-Algorithms More Human?” In AI for Everyone? Critical Perspectives, edited by Pieter Verdegem, 161–80. University of Westminster Press, 2021. http://www.jstor.org/stable/j.ctv26qjjhj.12.

-Blum, Lenore, and Manuel Blum. “A Theory of Consciousness from a Theoretical Computer Science Perspective: Insights from the Conscious Turing Machine.” Proceedings of the National Academy of Sciences of the United States of America 119 (2022): n. pag.

-Merchán, Eduardo C. Garrido, and Martin Molina. “A Machine Consciousness Architecture Based on Deep Learning and Gaussian Processes.” In Hybrid Artificial Intelligent Systems: 15th International Conference, HAIS 2020, Gijón, Spain, November 11–13, 2020, Proceedings, 350–61. Springer International Publishing, 2020.